Parents filed a lawsuit against OpenAI

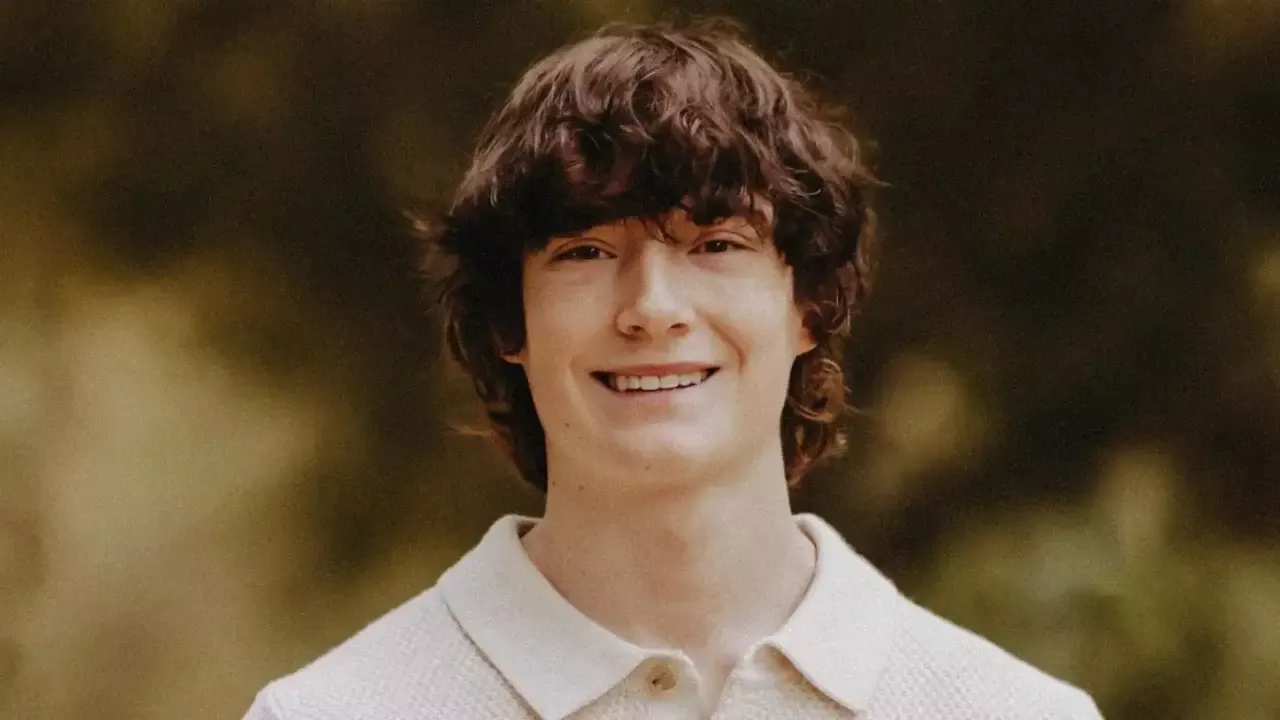

After the death of a 16-year-old in California, his parents filed a lawsuit against OpenAI, the developer of ChatGPT, Zamin.uz reported.

They claim that the chatbot encouraged their son to take his own life. The case drew widespread public attention, and OpenAI subsequently announced new parental controls.

Under these controls, parents will be able to link their accounts to their children's accounts and restrict certain features. In addition, if the system detects that a child is under severe psychological stress, the parents will receive a notification.

However, the teenager's parents and their lawyer consider these measures insufficient. They say OpenAI is trying to change the subject rather than address the problem.

They argue that the chatbot should be temporarily suspended.

OpenAI acknowledged that in some cases its system did not work as intended and said it is developing additional safeguards together with experts to protect young people's mental health.

The case has once again raised questions about the safety and responsible use of artificial intelligence technologies.